Big Data Needs Data Scientists

The United States alone faces a shortage of 140,000 to 190,000 people with analytical expertise and 1.5 million managers and analysts with the skills to understand and make decisions based on the analysis of big data.

Quantitative

computer science

data modelling

business domain

visualization

Data driven

statistics

understanding-communication

Technical

domain

skeptical -what

QHD: quantitative, hacking, domain

Knowledge of people: deep talent

Statistician/mathematician: more quants, less technical

Traditional research: more business, more quants, less techie

Business intelligence: more tech, more business, less quants

Data scientists: more technical, more business, more quants

Phase 1: statistics (functional): methods, process, theorems, techniques

Phase 2: big data

Phase 3: big data analytics using R

Phase 4: machine learning, NLP

Phase 5: predictive, competitive intelligence

4 A's

Data architecture

Data acquisition

Data analysis

Data archiving.

Data architecture: the design of your software/hardware system to read and store the data for the business, covering where the data originates and how it supports the various people in the business.

A data scientist would help the system architect by providing input on how the data would need to be routed and organized to support the analysis, visualization, and presentation of the data to the appropriate people.
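To make the four A's a little more concrete, here is a minimal sketch in Python using only the standard library. Everything in it is an assumption made up for illustration: the `RAW` feed, the field names, and the `acquire`, `analyse`, and `archive` helpers. The "architecture" is simply the way the three steps are wired together.

```python
import csv
import io
import json
import statistics

# Hypothetical raw feed; in practice this would come from a file or a service.
RAW = "customer,rating\nann,4\nbob,5\ncara,3\n"

def acquire(text):
    """Data acquisition: parse the raw CSV text into typed records."""
    reader = csv.DictReader(io.StringIO(text))
    return [{"customer": r["customer"], "rating": int(r["rating"])} for r in reader]

def analyse(rows):
    """Data analysis: compute a simple summary of the ratings."""
    ratings = [r["rating"] for r in rows]
    return {"count": len(ratings), "mean_rating": statistics.mean(ratings)}

def archive(rows, summary):
    """Data archiving: serialise records and summary for later reuse."""
    return json.dumps({"rows": rows, "summary": summary}, sort_keys=True)

# Data architecture: how the steps are routed into one another.
rows = acquire(RAW)
summary = analyse(rows)
archived = archive(rows, summary)
print(summary)
```

In a real system each step would be a separate component (ingestion, analytics, storage), and the data scientist's input is about what each step needs from the one before it.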

Measurement

The advantage of the microscope for the biologist and the chemist

Increases productivity and profitability

Data driven

Charts and graphs show things that are already decided.

But for the analyst it is an experiment: choosing among various options for handling the data.

Skills to collect and analyse different kinds of data: non-financial and non-numeric.

Customer experience, emotions, likes ...

Landscape

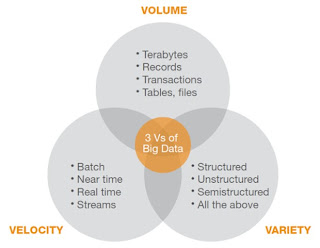

Big data: not only volume; it is nano data, grains of data.

Leads to BI, to view the same data in different ways.
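Viewing the same data in different ways can be sketched as follows, in plain Python. The `sales` records, their field names, and the `total_by` helper are hypothetical and exist only for this example; a BI tool would do the same regrouping interactively.

```python
from collections import defaultdict

# Hypothetical grain-level records: one row per individual sale.
sales = [
    {"region": "North", "product": "A", "amount": 120},
    {"region": "North", "product": "B", "amount": 80},
    {"region": "South", "product": "A", "amount": 200},
    {"region": "South", "product": "B", "amount": 50},
]

def total_by(records, key):
    """Sum the `amount` field, grouped by the given key."""
    totals = defaultdict(int)
    for row in records:
        totals[row[key]] += row["amount"]
    return dict(totals)

# Two views of the same grains of data.
by_region = total_by(sales, "region")
by_product = total_by(sales, "product")
print(by_region)
print(by_product)
```

Because the data is kept at the finest grain, any new view is just another grouping key; nothing has to be collected again.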

Conceive the data for advantage; break down the opponent's statistics in seconds to succeed in the game.
